retrowin32: redoing syscalls

This post is part of a series on retrowin32.

The "Windows emulator" half of retrowin32 is an implementation of the Windows API, which means it provides implementations of functions exposed by Windows. I've recently changed how calls from emulated code into these native implementations work, and this post gives background on how and why.

Consider a function found in kernel32.dll as taken from MSDN (with some arguments omitted for brevity):

BOOL WriteFile(
  [in] HANDLE  hFile,
  [in] LPCVOID lpBuffer,
  [in] DWORD   nNumberOfBytesToWrite,
);

In retrowin32 we write a Rust implementation of it like this:

#[win32_derive::dllexport]
pub fn WriteFile(
    machine: &mut Machine,
    hFile: HFILE,
    lpBuffer: &[u8],
) -> bool {
    ...
}

Suppose we have some 32-bit x86 executable that thinks it's calling the former. retrowin32's machinery connects it through to the latter — even though the implementation might be running on 64-bit x86, a different CPU architecture, or even the web platform.

Language gap

Before we get into the lower-level x86 details, you might first notice that those function prototypes don't even take the same arguments: nNumberOfBytesToWrite disappeared.

On the caller's side, a function call like WriteFile(hFile, "hello", 5); creates a stack that looks like:

return address              ← stack pointer points here
hFile
pointer to "hello" buffer
5

The dllexport annotation in the Rust code above makes a code generator emit an interop function that knows how to read various Rust types from the stack. For example, for WriteFile the generated code looks something like the following, which maps the pointer+length pair into a Rust slice:

mod impls {
    pub fn WriteFile(machine: &mut Machine, stack_pointer: u32) -> u32 {
        let hFile = machine.mem.read(stack_pointer + 4); // hFile
        let lpBuffer = machine.mem.read(stack_pointer + 8); // pointer to "hello"
        let len = machine.mem.read(stack_pointer + 12); // 5
        let slice = machine.mem.slice(lpBuffer, len);
        // Call the human-authored function; its bool result becomes a u32 for eax.
        super::WriteFile(machine, hFile, slice) as u32
    }
}
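For a self-contained illustration of the same idea, here's a runnable sketch. Mem, write_file, and write_file_shim are hypothetical stand-ins, not retrowin32's real types; the sketch passes the output sink separately from guest memory so that borrowing a slice of memory doesn't conflict with a mutable borrow of the whole machine.

```rust
/// Hypothetical stand-in for emulated memory: a flat byte array indexed by
/// 32-bit guest addresses.
struct Mem(Vec<u8>);

impl Mem {
    /// Reads a little-endian u32 at `addr`, the way x86 stores it.
    fn read_u32(&self, addr: u32) -> u32 {
        let a = addr as usize;
        u32::from_le_bytes(self.0[a..a + 4].try_into().unwrap())
    }

    /// Borrows `len` bytes of guest memory starting at `addr`.
    fn slice(&self, addr: u32, len: u32) -> &[u8] {
        let a = addr as usize;
        &self.0[a..a + len as usize]
    }
}

/// Stand-in for the human-authored implementation: it "writes" by
/// appending to `out` and reports success.
fn write_file(out: &mut Vec<u8>, _h_file: u32, buf: &[u8]) -> bool {
    out.extend_from_slice(buf);
    true
}

/// Generated-style shim: read the arguments that sit just above the return
/// address, turn pointer+length into a slice, and call the typed function.
fn write_file_shim(mem: &Mem, out: &mut Vec<u8>, esp: u32) -> u32 {
    let h_file = mem.read_u32(esp + 4);
    let lp_buffer = mem.read_u32(esp + 8);
    let len = mem.read_u32(esp + 12);
    let buf = mem.slice(lp_buffer, len);
    write_file(out, h_file, buf) as u32 // BOOL result, 1 or 0, destined for eax
}
```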

There's a bit more detail around how the return value makes it into the eax register and how the stack gets cleaned up, but that is most of it. With these in place, the remaining question is how to intercept the executable's DLL calls and pass them through to these implementations.
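The return-and-cleanup step mentioned above can be sketched like this, assuming the stdcall convention that Win32 APIs use on 32-bit x86, where the callee (not the caller) pops the arguments. Regs and finish_stdcall are hypothetical names, and the return address is passed in as if already read from the top of the stack.

```rust
/// Hypothetical register file with just the fields this step touches.
struct Regs {
    eax: u32,
    esp: u32,
    eip: u32,
}

/// Completes a stdcall-style return from a builtin: the result goes in eax,
/// execution resumes at the return address, and the callee pops both the
/// return address and `arg_bytes` of arguments, like x86 `ret imm16`.
fn finish_stdcall(regs: &mut Regs, ret_addr: u32, result: u32, arg_bytes: u32) {
    regs.eax = result;
    regs.eip = ret_addr;
    regs.esp += 4 + arg_bytes; // 4 bytes for the return address itself
}
```

For the three-argument WriteFile above, arg_bytes would be 12.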

DLL calls

Emulation aside, when an executable calls an internal function it knows where that function is in memory, so it can generate a call instruction targeting the direct address. When an executable wants to call a function in a DLL, it doesn't know where in memory the DLL is, so how does it know where to call?

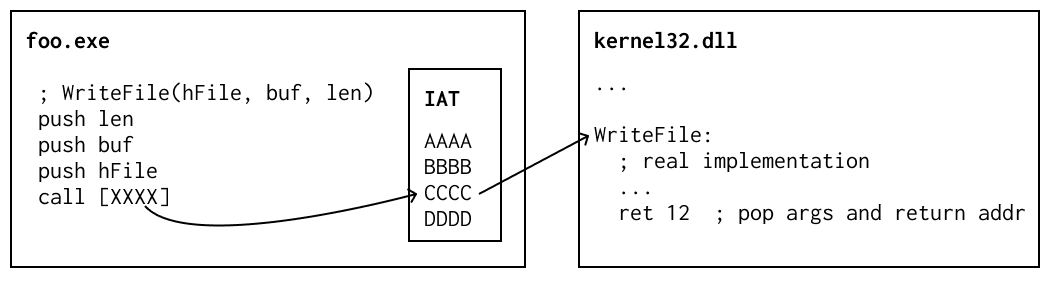

In Windows this is handled by the "import address table" (IAT), which is an array of pointers at a known location within the executable. Each call site generates code that calls by indirecting through the IAT: instead of calling WriteFile at a specific address, it calls the address found in the WriteFile entry of the IAT. At load time, the loader writes the address of each imported function into its IAT entry.

(In this picture, XXXX is a constant address pointing at the IAT, while CCCC is the address written into the IAT at load time that points to the implementation of WriteFile.)
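The two halves of that picture, load-time patching and call-site indirection, can be modeled in a few lines. This is a simplified sketch with hypothetical fill_iat and call_target functions that resolve imports by name only; a real loader also deals with ordinals, multiple DLLs, and writing into the executable's own memory.

```rust
use std::collections::HashMap;

/// Loader side: resolve each imported name against the DLL's exports and
/// store the resulting address in the corresponding IAT slot.
fn fill_iat(imports: &[&str], exports: &HashMap<&str, u32>) -> Result<Vec<u32>, String> {
    imports
        .iter()
        .map(|name| {
            exports
                .get(*name)
                .copied()
                .ok_or_else(|| format!("unresolved import {name}"))
        })
        .collect()
}

/// Call-site side: `call [XXXX]` reads the slot at run time instead of
/// embedding the callee's address in the instruction.
fn call_target(iat: &[u32], slot: usize) -> u32 {
    iat[slot]
}
```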

Advanced readers might ask: given that we're writing addresses into the IAT at load time, why is there this indirection instead of just writing the correct addresses into the call sites? The answer involves a complex balance between making the common case fast and sharing memory. Windows even has an optimization that attempts to make IATs, which are mutated after loading and so typically trigger a copy-on-write, amenable to being shared across process instances.

retrowin32, before

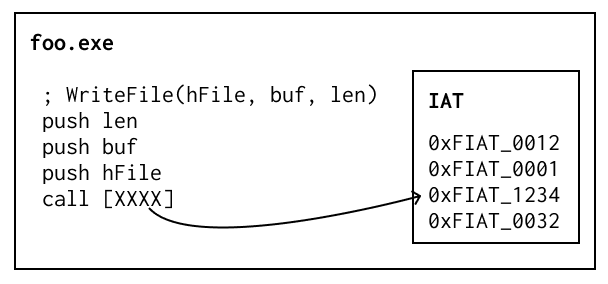

In retrowin32's case we just needed some easy way to identify these calls, so retrowin32 poked easy-to-spot magic numbers into the IAT.

The emulator's code then looks like:

const builtins = [..., WriteFile, ...]

fn emulate() {
    if instruction_pointer looks like 0xFIAT_xxxx {
        builtins[xxxx].call()
    } else {
        ...emulate code found at instruction_pointer...
    }
}
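Here is that pseudocode as a runnable sketch. Since "0xFIAT" isn't valid hex, it assumes a hypothetical magic prefix of 0xF1A7_0000 with the low 16 bits as the builtin index; the builtins just return their names so the dispatch is observable.

```rust
/// Hypothetical magic prefix: the top 16 bits mark a fake IAT address.
const MAGIC_MASK: u32 = 0xFFFF_0000;
const MAGIC_BASE: u32 = 0xF1A7_0000;

type Builtin = fn() -> &'static str;

fn write_file() -> &'static str {
    "WriteFile"
}
fn exit_process() -> &'static str {
    "ExitProcess"
}

const BUILTINS: [Builtin; 2] = [write_file, exit_process];

/// The value the loader would poke into IAT slot `i` instead of a real address.
fn magic_addr(i: u16) -> u32 {
    MAGIC_BASE | i as u32
}

/// One step of the old emulator loop: run a builtin if `eip` is magic,
/// otherwise return None to mean "emulate the x86 code at eip".
fn step(eip: u32) -> Option<&'static str> {
    if (eip & MAGIC_MASK) == MAGIC_BASE {
        let idx = (eip & !MAGIC_MASK) as usize;
        Some(BUILTINS[idx]()) // panics on a bogus index
    } else {
        None
    }
}
```

Note that the check runs on every step, which is the performance cost listed below.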

This worked fine for a long time! But it had a few important problems that became apparent over time.

- In "native" x86-64 mode we don't get to intercept all calls to our magic addresses, and instead need IAT entries to point to real x86 code. This required a significantly different code path.

- The Windows LoadLibrary() call gives you a pointer to the real base address where a DLL was loaded. An executable I was trying to run was calling LoadLibrary() and attempting to traverse the various DLL file headers found at that pointer. Because retrowin32 didn't actually load real libraries, these headers didn't exist.
- DLLs can expose data other than functions. For example, DirectDraw exports vtables, and msvcrt.dll exports plain variables like ints.
- Machinery like the debugger that can single-step or show memory would get confused by these magic invalid addresses. In the above arrangement, there isn't an obvious place to put a breakpoint to break on calls to WriteFile().
- The core emulation loop, the most performance-sensitive code, needs to repeatedly check whether we've hit one of these magic addresses. (Things are arranged such that the check runs once per basic block and not once per instruction, at least.)

retrowin32, now

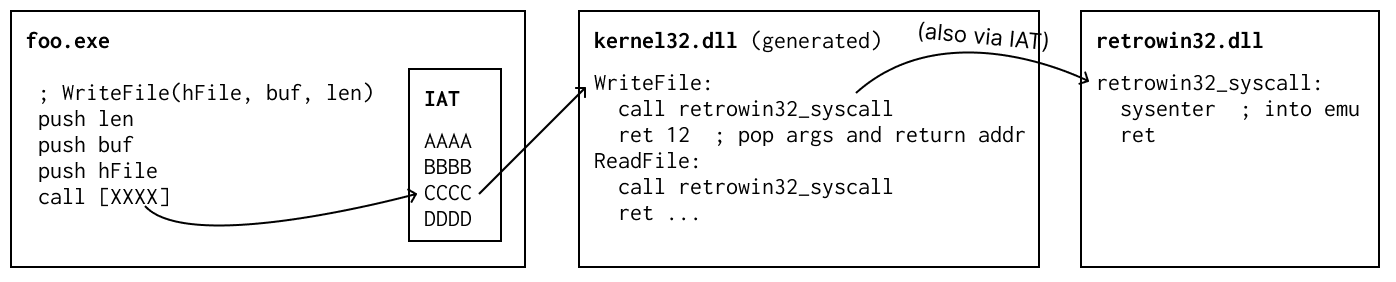

The shared root cause of some of the above issues is that executables just expect real DLLs to be found in memory. (Pretty much every problem bottoms out in Hyrum's Law.) I could make retrowin32 attempt to construct all the appropriate in-memory layout at runtime, but ultimately we can match DLL semantics best by creating real DLLs for system libraries and loading them like any other DLL.

However, all these DLLs need to do is hand control off to handlers within retrowin32. For this I changed retrowin32's code generator to generate trivial snippets of x86 assembly, which compile into real win32 DLLs as part of the retrowin32 build. (My various detours through cross-compiling are finally paying off!) retrowin32 already needs to be able to load the DLLs found out in the wild, and by using a real DLL toolchain to construct the system DLLs I guarantee they have all of the expected layout.

I can then bundle the literal bytes of the DLLs into the retrowin32 executable such that they can be loaded when needed without needing to shuffle files around; they are a few kilobytes each.
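One way to sketch that bundling in Rust is a static table of name/bytes pairs. In a real build each entry would presumably come from something like include_bytes!("…/kernel32.dll") (a real Rust macro, but the paths are elided here); this sketch uses tiny placeholder byte strings so it compiles on its own. The table and find_builtin_dll are hypothetical. Matching is case-insensitive because Windows DLL names are.

```rust
// Placeholders; a real build would use include_bytes! on the built stub DLLs.
const KERNEL32: &[u8] = b"MZ placeholder for kernel32";
const DDRAW: &[u8] = b"MZ placeholder for ddraw";

/// Hypothetical table of bundled system DLLs, keyed by file name.
static BUILTIN_DLLS: [(&str, &[u8]); 2] = [("kernel32.dll", KERNEL32), ("ddraw.dll", DDRAW)];

/// Look up a bundled DLL's bytes by name, ignoring ASCII case.
fn find_builtin_dll(name: &str) -> Option<&'static [u8]> {
    BUILTIN_DLLS
        .iter()
        .find(|(n, _)| n.eq_ignore_ascii_case(name))
        .map(|&(_, bytes)| bytes)
}
```

The returned bytes can then go through the same PE loader used for any other DLL.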

(Coincidentally, the DREAMM emulator, a much more competent Windows emulator, recently made a similar change for pretty similar reasons, which makes me more confident this is the right track.)

Each stub function needs to trigger the retrowin32 code that handles builtin functions.

On x86-64 that code is especially tricky.

To make this swappable, the stub functions all call out to one known shared function, retrowin32_syscall, which is yet another symbol that retrowin32 can swap out for the different use cases. The stubs find a reference to that symbol using the same DLL import machinery again, importing it from a magic ambient retrowin32.dll.

Under emulation, retrowin32_syscall can point at some code that invokes the x86 sysenter instruction, an arbitrary choice that is easy for an emulator to hook. On native x86-64 we can instead implement it with the stack-switching far-calling goop.
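As an illustration of why sysenter is easy to hook: it has a fixed two-byte encoding, 0F 34, so an emulator can recognize it at the instruction pointer and divert to its builtin dispatcher. The Action enum and classify function below are hypothetical; a real emulator would do this inside its full instruction decoder rather than with a raw byte match.

```rust
/// SYSENTER is encoded as the two bytes 0F 34.
const SYSENTER: [u8; 2] = [0x0F, 0x34];

/// What a hypothetical emulator step decides to do at `eip`.
#[derive(Debug, PartialEq)]
enum Action {
    /// Hand off to retrowin32's builtin dispatcher.
    Syscall,
    /// Decode and emulate an ordinary instruction.
    Emulate,
}

fn classify(code: &[u8], eip: usize) -> Action {
    if code.get(eip..eip + 2) == Some(&SYSENTER[..]) {
        Action::Syscall
    } else {
        Action::Emulate
    }
}
```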

Finally, in this picture, how does retrowin32_syscall know which builtin was invoked? When the sysenter is hit, the return address is at the top of the stack, so with some bookkeeping we can derive from that address that the call came from the WriteFile stub.
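That bookkeeping can be sketched as a sorted map from each stub's address range to its builtin. The return address pushed by the stub's call points just past that instruction, still inside the stub, so the owner is the stub with the greatest start address at or below it, provided the address is before that stub's end. StubTable and the addresses in the usage are hypothetical; the 9-byte stub size assumes a 6-byte call [abs32] followed by a 3-byte ret imm16.

```rust
use std::collections::BTreeMap;

/// Hypothetical bookkeeping: stub start address -> (exclusive end, builtin name).
struct StubTable(BTreeMap<u32, (u32, &'static str)>);

impl StubTable {
    /// Find the stub whose [start, end) range contains `ret_addr`.
    fn lookup(&self, ret_addr: u32) -> Option<&'static str> {
        let (_, &(end, name)) = self.0.range(..=ret_addr).next_back()?;
        (ret_addr < end).then_some(name)
    }
}
```

Usage: with WriteFile's stub at 0x1000_0000..0x1000_0009, a return address of 0x1000_0006 (just after the call) maps back to WriteFile.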

Vtables

COM libraries like DirectDraw expose vtables to executables: when you call e.g. DirectDrawCreate you get back a pointer to (a pointer to) a table of functions within DirectDraw. For the executable to be able to read through that pointer, the table has to exist within emulated memory, so previously I had some macro soup that knew to allocate a bit of emulated memory and shove some function pointers into it when the DLLs were used.
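To make the "pointer to (a pointer to) a table" concrete, here is a sketch of how a method address is found in emulated memory on 32-bit x86: the object's first field holds the vtable address, and method i sits at vtable + 4*i. Mem and method_addr are hypothetical helpers; the method order QueryInterface, AddRef, Release is the standard IUnknown prefix.

```rust
/// Hypothetical flat guest memory.
struct Mem(Vec<u8>);

impl Mem {
    fn read_u32(&self, addr: u32) -> u32 {
        let a = addr as usize;
        u32::from_le_bytes(self.0[a..a + 4].try_into().unwrap())
    }

    fn write_u32(&mut self, addr: u32, val: u32) {
        let a = addr as usize;
        self.0[a..a + 4].copy_from_slice(&val.to_le_bytes());
    }
}

/// The double indirection behind `obj->lpVtbl->Method(obj, ...)`:
/// object -> vtable pointer -> function pointer at slot `index`.
fn method_addr(mem: &Mem, obj: u32, index: u32) -> u32 {
    let vtable = mem.read_u32(obj);
    mem.read_u32(vtable + 4 * index)
}
```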

After this change, I can just expose the vtables as static data from the DLLs, like this:

.globl _IDirectDrawClipper
_IDirectDrawClipper:
    .long _IDirectDrawClipper_QueryInterface
    .long _IDirectDrawClipper_AddRef
    .long _IDirectDrawClipper_Release
    .long _IDirectDrawClipper_GetClipList
    .long _IDirectDrawClipper_GetHWnd
    ...
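Since these tables are emitted by the code generator, producing one is just string formatting. Below is a hypothetical vtable_asm helper that writes a listing in the shape above, one .long per method in declaration order; the leading underscore follows the 32-bit Windows C symbol decoration used in that listing. It is a sketch, not retrowin32's actual generator.

```rust
/// Emit the static vtable for a COM interface as assembly data.
fn vtable_asm(iface: &str, methods: &[&str]) -> String {
    let mut out = format!(".globl _{iface}\n_{iface}:\n");
    for m in methods {
        out.push_str(&format!("    .long _{iface}_{m}\n"));
    }
    out
}
```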

Building DLLs

To generate these stub DLLs, I currently generate assembly and run it through the clang-cl toolchain, which seamlessly cross-compiles C/asm to win32. It's especially handy to be able to personally bug one of its authors for tech support; thanks again, Nico!

It feels a bit disappointing to need to pull in a whole separate compiler toolchain for this, especially given that Rust already contains the same LLVM bits. I spent a disproportionate amount of time tinkering with alternatives, like using one of the other assemblers coupled with the lld-link embedded in Rust, or even using Rust itself, but I ran into a variety of small problems with each.

Probably something to revisit later.

Dynamic linking

Any time I tinker in this area (and also when reading Raymond Chen's post, also linked above) I always find myself thinking about how much dang machinery there is to make dynamic linking work and then to try to win back its performance.

It makes me think of a mini-rant I heard Rob Pike give about dynamic linking (it was clearly a canned speech for him, surely a pet peeve) and how hard Go tries to avoid it. I know the static/dynamic linking thing is a tired argument, I understand both sides of it, and I understand that engineering is about choosing context-specific tradeoffs, but much like with minimal version selection it is funny how clear it is to me that dynamic linking is just not the technology to choose unless forced. Introspecting, I guess this reveals something about my own engineering values.

Appendix: dllimport calls

The above omitted a lot of details, sorry pedants! As penance here is one small detail I ratholed on that might be interesting for you; non-pedants, feel free to skip this section.

In the stub DLLs I first generated code like:

WriteFile:
    call _retrowin32_syscall
    ret 12

To make it all link, I provided a retrowin32.lib import library at link time that tells the linker that _retrowin32_syscall comes from retrowin32.dll.

I expected that to generate a call via the IAT, but the code it generated went through one additional indirection. That is, the resulting machine code in the DLL looked like:

WriteFile:
    call _retrowin32_syscall                  ; e8 rel32
    ...
_retrowin32_syscall:
    jmp [IAT entry for retrowin32_syscall]    ; ff25 addr

Why did it do this? At least according to this blog's explanation, when compiling the initial assembly into an object file, the assembler doesn't know whether retrowin32_syscall is a local symbol that will be resolved at link time or a symbol imported from a DLL. So it assumes the former and generates a rel32 call to a local function.

Then, at link time, the linker sees that this call actually needs to resolve to an IAT reference, so it generates this extra bit of code containing the jmp (which Ghidra labels a "thunk").
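The difference between these encodings is easy to see in a small decoder sketch. E8 takes a signed 32-bit displacement relative to the end of the 5-byte instruction, while FF 15 (call) and FF 25 (jmp) take an absolute address of a pointer slot, such as an IAT entry. The Branch enum and decode function are hypothetical and only handle these three forms.

```rust
#[derive(Debug, PartialEq)]
enum Branch {
    /// E8 rel32: direct call, target relative to the next instruction.
    CallRel { target: u32 },
    /// FF 15 abs32: call through the pointer stored at `slot`.
    CallIndirect { slot: u32 },
    /// FF 25 abs32: jump through the pointer stored at `slot` (the thunk).
    JmpIndirect { slot: u32 },
}

/// Decode a branch located at guest address `addr`.
fn decode(addr: u32, code: &[u8]) -> Option<Branch> {
    // Little-endian 32-bit immediate at byte offset `at`, if present.
    let imm = |at: usize| -> Option<u32> {
        let bytes: [u8; 4] = code.get(at..at + 4)?.try_into().ok()?;
        Some(u32::from_le_bytes(bytes))
    };
    match code {
        [0xE8, ..] => {
            // rel32 is signed; wrapping_add on the raw bits performs the
            // two's-complement addition.
            let rel = imm(1)?;
            Some(Branch::CallRel { target: addr.wrapping_add(5).wrapping_add(rel) })
        }
        [0xFF, 0x15, ..] => Some(Branch::CallIndirect { slot: imm(2)? }),
        [0xFF, 0x25, ..] => Some(Branch::JmpIndirect { slot: imm(2)? }),
        _ => None,
    }
}
```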

To eliminate this indirection I instead generate assembly that looks like:

WriteFile:
    call [__imp__retrowin32_syscall]

where that __imp_-prefixed symbol is somehow understood to refer directly to the IAT entry. At the level of C code this is related to the dllimport attribute, but I am not sure whether that attribute exists at the assembly level. Peeking at the LLVM source, I see it actually has some special-casing around symbols beginning with the literal string "__imp_", yikes.
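For completeness, a generator for stubs in this final form can be a one-liner. This hypothetical stub_asm sketch assumes every argument is a 4-byte stdcall argument, so the ret operand is 4 times the argument count, matching the ret 12 for the three-argument WriteFile shown earlier.

```rust
/// Emit one stub: call retrowin32_syscall through its IAT entry, then pop
/// the stdcall arguments on return (assumes 4 bytes per argument).
fn stub_asm(name: &str, arg_count: u32) -> String {
    let arg_bytes = 4 * arg_count;
    format!("{name}:\n    call [__imp__retrowin32_syscall]\n    ret {arg_bytes}\n")
}
```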