How accelerated rendering works in Chrome/Mac

Today's post is a guest post from Nico Weber, who did so much good work on Chrome in his 20% time that I didn't realize he wasn't full-time on the project until it was announced that he had joined.

Browsers are racing to add "Hardware accelerated rendering" to their feature checklists. As usual, this means different things in different browsers. In Chrome, the near-term plan is to accelerate CSS 3d transforms and WebGL.

Chrome's renderer process is sandboxed, so it can't access the graphics card directly. To remedy this, a new process is introduced: the GPU process. The GPU process talks to the GPU and keeps all GPU-related state (modelview matrix, textures, what have you).

CSS 3d Transforms

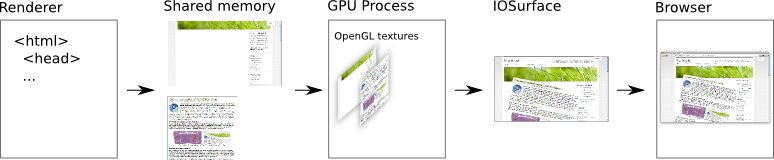

When a page uses CSS 3d transforms, the renderer renders the contents of each layer with to-be-transformed content into memory as usual and then sends it to the GPU process (via shared memory), which keeps it in a texture. The GPU process then composites all the layer textures, using the current transform of every layer, and writes the result into an IOSurface the size of the tab contents. (An IOSurface is an OS X primitive that represents a piece of GPU memory that can be shared across processes.) Since the textures and the surface are all in GPU memory, this is a GPU->GPU copy, which is fast. The GPU process then notifies the browser process, which takes the same IOSurface and renders it to the screen.

When e.g. the rotation angle of a layer is changed, the renderer only needs to send the updated angle to the GPU process, and recompositing can happen completely on the graphics card.
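The split between the expensive path (uploading layer contents) and the cheap path (changing a transform) can be sketched in a few lines of Python. This is only a toy model: `Layer`, `Compositor`, and the `bytes_uploaded` counter are hypothetical names made up for illustration, not Chrome's actual classes, and the real compositing happens on the graphics card.

```python
import math
from dataclasses import dataclass


@dataclass
class Layer:
    """Hypothetical stand-in for a layer texture kept by the GPU process."""
    pixels: bytes            # rendered content, uploaded once by the renderer
    angle_deg: float = 0.0   # current rotation of the layer


class Compositor:
    """Toy model of the GPU process: holds textures and their transforms."""

    def __init__(self):
        self.layers = {}
        self.bytes_uploaded = 0

    def upload_layer(self, layer_id, pixels):
        # Expensive path: pixel data crosses the process boundary.
        self.layers[layer_id] = Layer(pixels)
        self.bytes_uploaded += len(pixels)

    def set_angle(self, layer_id, angle_deg):
        # Cheap path: only one number is sent; the texture is untouched.
        self.layers[layer_id].angle_deg = angle_deg

    def transform(self, layer_id):
        # 2x2 rotation matrix that would be applied when drawing the layer.
        a = math.radians(self.layers[layer_id].angle_deg)
        return ((math.cos(a), -math.sin(a)),
                (math.sin(a), math.cos(a)))


gpu = Compositor()
gpu.upload_layer("box", bytes(64 * 64 * 4))   # one 64x64 RGBA layer
for angle in (0, 30, 60, 90):                 # animate the rotation
    gpu.set_angle("box", angle)
```

After the loop, `gpu.bytes_uploaded` still reflects the single initial upload; the four angle changes moved no pixel data at all.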

CoreAnimation plugins

For historical reasons, plugins using the CoreAnimation drawing model also send their contents to the browser in an IOSurface. For correct compositing, plugins would instead need to send their contents to the GPU process, which would then composite them with the other layers. The browser shouldn't need to do anything to draw such plugins. That's not implemented yet, and until then CSS transforms on plugins won't work.

Video playback

Longer term, the GPU process will also do hardware-accelerated decoding of movie frames, as far as I understand. The movie container parsing will stay in the renderer, since that code wants to be sandboxed. The flow will be: raw data from the network arrives in the browser -> sent to the renderer -> renderer splits it into raw video frames and sends them to the GPU process -> GPU process does hardware-accelerated decoding into a texture -> GPU process composites that texture with its other textures into the IOSurface -> browser draws the IOSurface. This might be somewhat inaccurate; I'm not too familiar with how video works.

WebGL

A WebGL texture upload looks similar: The encoded image arrives over the network in the browser -> sent to renderer for decompression -> uncompressed data sent to GPU process -> GPU process uploads image data into a texture. Since textures are usually uploaded only once and after that referenced by ID, that's not quite as terrible as it sounds.
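Here is a rough Python sketch of why the one-time upload cost amortizes well. Everything in it is an assumption made for illustration: `GpuProcess`, `renderer_decode`, and the 4-byte cost per draw are invented, and `zlib` merely stands in for real image decoding such as PNG or JPEG.

```python
import zlib


class GpuProcess:
    """Toy GPU process: owns textures and hands out integer IDs."""

    def __init__(self):
        self._textures = {}
        self._next_id = 1
        self.bytes_received = 0

    def upload_texture(self, raw_pixels):
        # One-time cost: the full uncompressed image is sent over.
        texture_id = self._next_id
        self._next_id += 1
        self._textures[texture_id] = raw_pixels
        self.bytes_received += len(raw_pixels)
        return texture_id

    def draw(self, texture_id):
        # Later commands carry only the small ID, not the pixel data.
        self.bytes_received += 4
        return len(self._textures[texture_id])


def renderer_decode(encoded):
    # zlib stands in for image decompression here; purely illustrative.
    return zlib.decompress(encoded)


raw = bytes(256 * 256 * 4)          # a 256x256 RGBA image
encoded = zlib.compress(raw)        # what arrives over the network
gpu = GpuProcess()
tex = gpu.upload_texture(renderer_decode(encoded))
for _ in range(100):                # 100 frames reuse the same texture
    gpu.draw(tex)
```

In this model the upload moves 262,144 bytes once, while the 100 subsequent draws together move only 400 bytes.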

When the renderer wants to execute a WebGL command, say glUniform4f(), it just calls that function. Through a shim library, the function call is serialized into something called a command buffer, which is then sent to the GPU process and executed.
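A minimal Python sketch of the shim idea follows. The wire format (a 32-bit opcode, a 32-bit uniform location, then four 32-bit floats), the `OP_UNIFORM4F` value, and the `CommandBufferShim` and `execute` names are all assumptions invented for this example; Chrome's real command buffer format is different.

```python
import struct

# Hypothetical opcode; a real command buffer uses its own numbering.
OP_UNIFORM4F = 1
# Little-endian: opcode, uniform location, four floats (24 bytes total).
_UNIFORM4F = struct.Struct("<Ii4f")


class CommandBufferShim:
    """Renderer side: looks like a GL call, but only appends bytes."""

    def __init__(self):
        self.buffer = bytearray()

    def glUniform4f(self, location, x, y, z, w):
        self.buffer += _UNIFORM4F.pack(OP_UNIFORM4F, location, x, y, z, w)


def execute(buffer, gl_uniform4f):
    """GPU process side: decode each command and call the real function."""
    offset = 0
    while offset < len(buffer):
        (opcode,) = struct.unpack_from("<I", buffer, offset)
        if opcode != OP_UNIFORM4F:
            raise ValueError(f"unknown opcode {opcode}")
        _, location, x, y, z, w = _UNIFORM4F.unpack_from(buffer, offset)
        gl_uniform4f(location, x, y, z, w)
        offset += _UNIFORM4F.size


# Renderer: issue two calls through the shim.
shim = CommandBufferShim()
shim.glUniform4f(3, 1.0, 0.5, 0.25, 1.0)
shim.glUniform4f(4, 0.0, 0.0, 0.0, 1.0)

# GPU process: replay the buffer; a list stands in for the real GL driver.
calls = []
execute(bytes(shim.buffer), lambda *args: calls.append(args))
```

The renderer never touches the GPU here: it only produces bytes, and the replay on the other side is the only place a real GL function would be called.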

Aside: Comparison with Safari

Safari currently does not have a separate sandboxed renderer process: HTML rendering and UI live in the same process, and this process can access the GPU directly. This means that Safari can upload the rendered HTML directly into a CALayer (roughly the same as an OpenGL texture) and let CoreAnimation composite all layers directly onto the screen. This saves a GPU->GPU copy.

For WebGL, Safari can call out to OpenGL directly, without having to ship all GL commands to another process.